Changelog

With access to the AgentOS instance within lifespan functions, you can now modify configurations, add agents, or resync settings after initialization, giving you greater flexibility and control over your AgentOS environment.

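```python
from contextlib import asynccontextmanager

from agno.agent import Agent
from agno.os import AgentOS

agent1 = Agent(name="Agent 1")
new_agent = Agent(name="New Agent")


@asynccontextmanager
async def lifespan(app, agent_os):
    # Access the AgentOS instance and update its configuration dynamically
    agent_os.agents.append(new_agent)
    # Resync so AgentOS reflects the updated agent list
    agent_os.resync(app=app)
    yield


agent_os = AgentOS(
    lifespan=lifespan,
    agents=[agent1],
)
app = agent_os.get_app()
```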

This is useful for runtime configuration updates and dynamic agent management.
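The reason changes made inside `lifespan` take effect is the startup/shutdown contract of an async context manager: code before `yield` runs once at startup, after the object has been constructed, and code after `yield` runs at shutdown. The stdlib-only sketch below illustrates that contract. `Registry`, its `resync` method, and the route strings are hypothetical stand-ins for AgentOS, not Agno's implementation.

```python
import asyncio
from contextlib import asynccontextmanager


class Registry:
    """Hypothetical stand-in for an AgentOS-like object (not Agno's API)."""

    def __init__(self, agents):
        self.agents = list(agents)
        self.routes = []

    def resync(self):
        # Rebuild derived state (here: one route per agent) from the current config
        self.routes = [f"/agents/{name}/runs" for name in self.agents]


@asynccontextmanager
async def lifespan(registry):
    # Startup: runs once, after the registry was constructed
    registry.agents.append("new_agent")
    registry.resync()
    yield
    # Shutdown: runs once when the server stops
    registry.routes.clear()


async def serve(registry):
    async with lifespan(registry):
        # While "serving", requests see the updated configuration
        return list(registry.routes)


registry = Registry(["agent1"])
registry.resync()
routes_while_running = asyncio.run(serve(registry))
print(routes_while_running)  # ['/agents/agent1/runs', '/agents/new_agent/runs']
```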

Keep your agent conversations efficient and relevant by controlling how much history is loaded. With num_history_messages, you can now fine-tune exactly how many messages from previous sessions are included in context.

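```python
from agno.agent import Agent
from agno.db.sqlite import SqliteDb

agent = Agent(
    db=SqliteDb(db_file="agno.db"),  # History is read from this database
    num_history_messages=10,  # Only load the last 10 messages from history
    add_history_to_context=True,
)
```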

This helps manage context window size and optimize token usage in long-running conversations.
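Conceptually, `num_history_messages` caps how much stored history is replayed into the prompt while the stored history itself stays intact. Here is a minimal sketch of that trimming, assuming history is a chronological list of role/content dicts. `build_context` is a hypothetical helper, not Agno's implementation.

```python
def build_context(history, new_message, num_history_messages=None):
    """Return the messages to send: the last N history messages plus the new one."""
    if num_history_messages is None:
        recent = list(history)  # No limit: replay everything
    elif num_history_messages <= 0:
        recent = []  # Guard: history[-0:] would return the whole list
    else:
        recent = history[-num_history_messages:]  # Keep only the most recent N
    return recent + [new_message]


history = [
    {"role": "user" if i % 2 == 0 else "assistant", "content": f"msg {i}"}
    for i in range(25)
]
context = build_context(history, {"role": "user", "content": "hi"}, num_history_messages=10)
print(len(context))  # 11
print(context[0]["content"])  # msg 15
```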

Learn more in the sessions documentation.

Make your agents faster and more efficient with asynchronous MongoDB access. With the AsyncMongoDb class, your agents can perform database operations without blocking, which improves performance in async workflows and lets them handle more requests concurrently.

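```python
import asyncio

from agno.agent import Agent
from agno.db.mongo import AsyncMongoDb

agent = Agent(
    db=AsyncMongoDb(
        db_name="agno_db",
        connection_string="mongodb://localhost:27017",
    )
)


async def main():
    # `await` is only valid inside a coroutine, so run the agent from one
    response = await agent.arun("Process this request")
    print(response.content)


asyncio.run(main())
```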

View the docs to learn more about async MongoDB.
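Why non-blocking database access helps: the stdlib-only sketch below simulates a database call with `asyncio.sleep`, a stand-in for a real async driver (no Agno or MongoDB calls are made). Because each `await` hands control back to the event loop while waiting, ten concurrent requests finish in roughly the time of one.

```python
import asyncio
import time


async def fake_db_query(request_id, latency=0.1):
    # Stand-in for a non-blocking database call; the event loop runs
    # other tasks while this one waits.
    await asyncio.sleep(latency)
    return f"result {request_id}"


async def handle_requests(n):
    start = time.perf_counter()
    # gather() runs all queries concurrently and returns results in input order
    results = await asyncio.gather(*(fake_db_query(i) for i in range(n)))
    return results, time.perf_counter() - start


results, elapsed = asyncio.run(handle_requests(10))
print(len(results), f"{elapsed:.2f}s")  # 10 results in roughly 0.1s, not 10 x 0.1s
```

With a blocking driver, the same ten calls would run one after another and take about ten times as long.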

With Claude Skills support, your agents can now handle complex reasoning, execute code, interact with tools, and work with common office documents.

Agno agents with Claude capabilities can go far beyond what prompts alone can achieve. They can create PowerPoint presentations, Excel spreadsheets, and Word documents, as well as analyze PDFs. You can also create custom skills for Claude to use.

Claude Skills also gives your agents access to filesystem-based resources loaded on demand, eliminating the need to repeat the same guidance. This makes your agents more efficient, powerful, and versatile, enabling them to automate workflows, perform sophisticated analysis, and deliver richer responses.

```python
from agno.agent import Agent
from agno.models.anthropic import Claude

agent = Agent(
    model=Claude(
        id="claude-sonnet-4-5-20250929",
        skills=[
            {"type": "anthropic", "skill_id": "pptx", "version": "latest"},
            {"type": "anthropic", "skill_id": "xlsx", "version": "latest"},
            {"type": "anthropic", "skill_id": "docx", "version": "latest"},
        ],
    ),
    instructions=["You are a document specialist."],
    markdown=True,
)

agent.print_response("Create a 3-slide presentation about AI trends")
```

View the docs to learn more about Agno's Claude Agent Skills support. Learn more about how Claude Skills work in the Anthropic documentation.

You can now use SQLite and MongoDB asynchronously with AsyncSqliteDb and AsyncMongoDb, enabling faster database operations and more efficient request handling in async workflows.

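```python
import asyncio

from agno.agent import Agent
from agno.db.sqlite import AsyncSqliteDb

agent = Agent(db=AsyncSqliteDb(db_file="sessions.db"))


async def main():
    # `await` is only valid inside a coroutine, so run the agent from one
    response = await agent.arun("Process this request")
    print(response.content)


asyncio.run(main())
```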

Make iterative development faster and more cost-efficient by caching LLM responses. Set cache_response=True on your model to store responses and avoid redundant API calls, which is ideal when testing and refining workflows.

```python
from agno.agent import Agent
from agno.models.openai import OpenAIChat

agent = Agent(
    model=OpenAIChat(
        id="gpt-4o",
        cache_response=True,
    )
)
```

Learn more about response caching.
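The idea behind response caching is to key each response on everything that determines it, so an identical request is served locally instead of triggering another API call. Below is a minimal sketch, assuming the key is the model id plus the exact messages. `CachedModel` and `fake_api` are hypothetical, and this is not how Agno stores its cache. A real cache would typically persist to disk so it survives across runs; this one lives in memory.

```python
import hashlib
import json


class CachedModel:
    """Hypothetical wrapper that memoizes responses by request content."""

    def __init__(self, model_id, call_api):
        self.model_id = model_id
        self.call_api = call_api  # function(model_id, messages) -> str
        self.cache = {}
        self.api_calls = 0

    def _key(self, messages):
        # Stable key: same model + same messages -> same hash
        payload = json.dumps({"model": self.model_id, "messages": messages}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def respond(self, messages):
        key = self._key(messages)
        if key not in self.cache:
            self.api_calls += 1  # Only cache misses reach the (fake) API
            self.cache[key] = self.call_api(self.model_id, messages)
        return self.cache[key]


def fake_api(model_id, messages):
    return f"{model_id} says: {messages[-1]['content'].upper()}"


model = CachedModel("gpt-4o", fake_api)
msgs = [{"role": "user", "content": "hello"}]
first = model.respond(msgs)
second = model.respond(msgs)  # Served from the cache, no API call
print(first, model.api_calls)  # gpt-4o says: HELLO 1
```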

Keep context relevant and token usage low by limiting tool calls from history. The max_tool_calls_from_history parameter helps optimize performance for long-running conversations by keeping only the most recent tool interactions in context while maintaining complete history in your database.

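```python
from agno.agent import Agent
from agno.db.sqlite import SqliteDb

agent = Agent(
    db=SqliteDb(db_file="agno.db"),  # Complete history stays in the database
    max_tool_calls_from_history=3,  # Only load the last 3 tool calls into context
    add_history_to_context=True,
)
```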

This is essential for production deployments where managing context windows and controlling costs are critical.
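The sketch below makes the trade-off concrete: a history filter keeps only the most recent tool results in context and returns a new list, so the full history is left untouched. The message shape (entries with `role == "tool"`) and `trim_tool_calls` are assumptions for illustration, not Agno's internal format.

```python
def trim_tool_calls(messages, max_tool_calls):
    """Drop all but the last `max_tool_calls` tool messages; keep everything else."""
    tool_indices = [i for i, m in enumerate(messages) if m["role"] == "tool"]
    keep_tools = set(tool_indices[-max_tool_calls:]) if max_tool_calls > 0 else set()
    # Build a new list so the caller's full history (e.g. in the database) is unchanged
    return [m for i, m in enumerate(messages) if m["role"] != "tool" or i in keep_tools]


history = []
for n in range(5):
    history.append({"role": "user", "content": f"question {n}"})
    history.append({"role": "tool", "content": f"tool result {n}"})
    history.append({"role": "assistant", "content": f"answer {n}"})

context = trim_tool_calls(history, max_tool_calls=3)
print([m["content"] for m in context if m["role"] == "tool"])
# ['tool result 2', 'tool result 3', 'tool result 4']
```

A production filter also has to drop the matching assistant tool-call requests. Providers such as OpenAI expect each tool result to pair with the call that produced it.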

View docs on managing tool calls.

Quickly turn your PowerPoint presentations into actionable knowledge. The new PPTXReader class allows agents to extract slide text and build knowledge bases directly from presentations, making it ideal for enterprise document processing, presentation analysis, and RAG workflows with office documents.

```python
from agno.agent import Agent
from agno.knowledge.knowledge import Knowledge
from agno.knowledge.reader.pptx_reader import PPTXReader
from agno.models.openai import OpenAIChat

# Extract slide text from the deck and add it to the knowledge base
knowledge = Knowledge()
knowledge.add_content(
    path="quarterly_review.pptx",
    reader=PPTXReader(),
)

# Let the agent search that knowledge when answering
agent = Agent(
    model=OpenAIChat(id="gpt-4o"),
    knowledge=knowledge,
    search_knowledge=True,
)
```

See docs for more.
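For intuition about what extracting slide text involves: a `.pptx` file is a ZIP archive that stores its slides as `ppt/slides/slideN.xml`, with visible text in DrawingML `<a:t>` elements. The stdlib-only sketch below pulls out that text slide by slide. It is not `PPTXReader`'s implementation. It ignores speaker notes and images, and it assumes file numbering matches presentation order (the authoritative order is recorded in `ppt/presentation.xml`).

```python
import io
import re
import zipfile
import xml.etree.ElementTree as ET

# DrawingML namespace used for text runs (<a:t>); PresentationML for the slide root
A_NS = "http://schemas.openxmlformats.org/drawingml/2006/main"
P_NS = "http://schemas.openxmlformats.org/presentationml/2006/main"
SLIDE_RE = re.compile(r"^ppt/slides/slide(\d+)\.xml$")


def extract_slide_text(source):
    """Return one string per slide, ordered by slide file number.

    `source` may be a path or a binary file-like object.
    """
    with zipfile.ZipFile(source) as zf:
        slides = []
        for name in zf.namelist():
            match = SLIDE_RE.match(name)
            if match:
                slides.append((int(match.group(1)), name))
        texts = []
        for _, name in sorted(slides):  # Numeric sort: slide10 comes after slide2
            root = ET.fromstring(zf.read(name))
            runs = [node.text for node in root.iter(f"{{{A_NS}}}t") if node.text]
            texts.append(" ".join(runs))
        return texts


def slide_xml(*lines):
    # Minimal slide XML for the demo; real slides nest <a:t> much deeper
    runs = "".join(f"<a:r><a:t>{line}</a:t></a:r>" for line in lines)
    return f'<p:sld xmlns:p="{P_NS}" xmlns:a="{A_NS}"><a:p>{runs}</a:p></p:sld>'


# Demo: an in-memory archive with the same slide layout as a .pptx file
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as zf:
    zf.writestr("ppt/slides/slide1.xml", slide_xml("Q3 Review", "Revenue up"))
    zf.writestr("ppt/slides/slide2.xml", slide_xml("Next steps"))
    zf.writestr("ppt/slides/slide10.xml", slide_xml("Appendix"))

slides_text = extract_slide_text(buffer)
print(slides_text)  # ['Q3 Review Revenue up', 'Next steps', 'Appendix']
```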

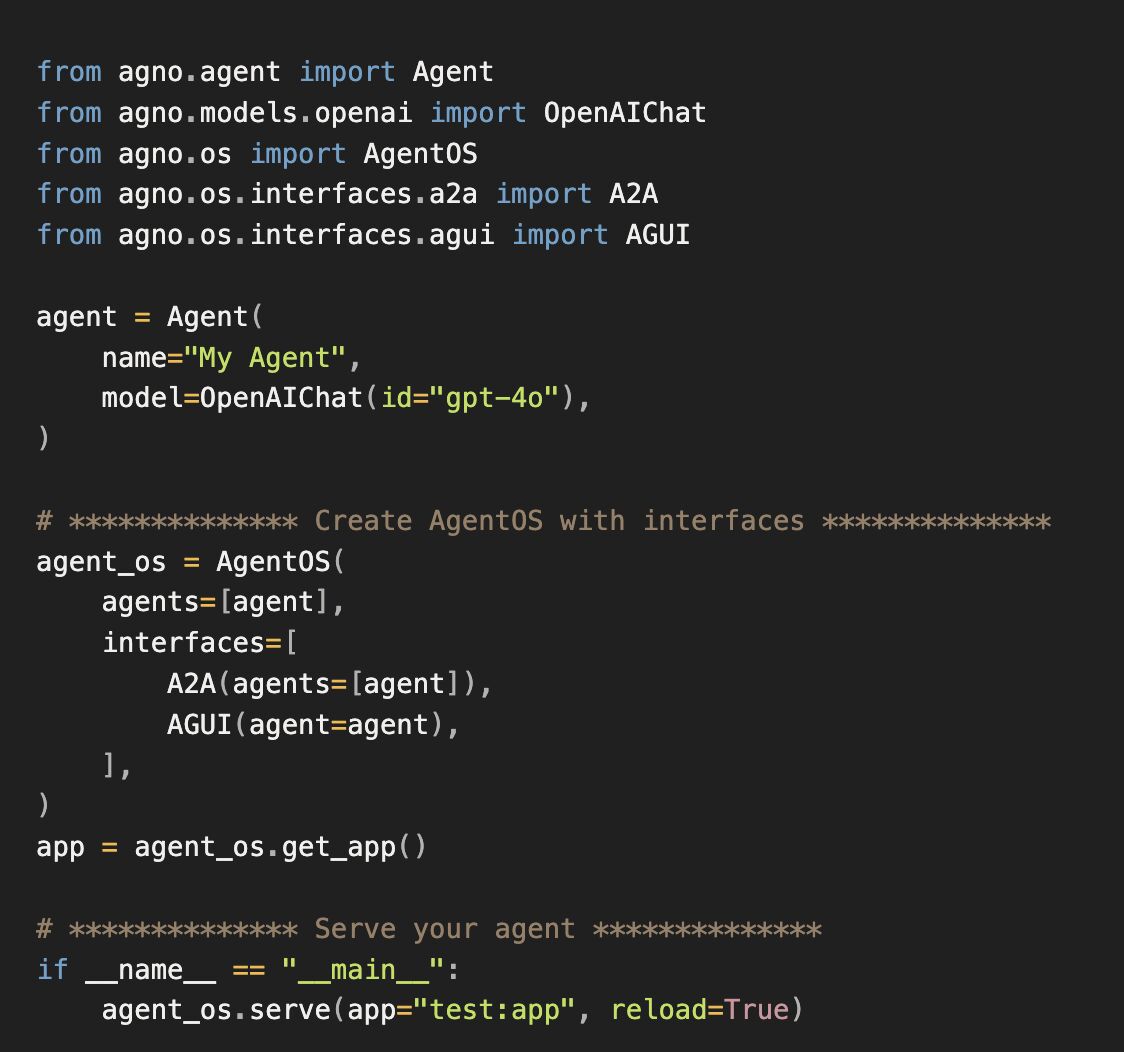

Enable seamless agent-to-agent communication and front-end connectivity. Agno agents can now interact directly with each other and connect to front-end interfaces through AgentOS using standardized A2A and AG-UI protocols.

Simply pass interfaces when creating your AgentOS to activate A2A and AG-UI, both of which can run simultaneously within the same instance. Learn more in the A2A docs and the AG-UI docs.

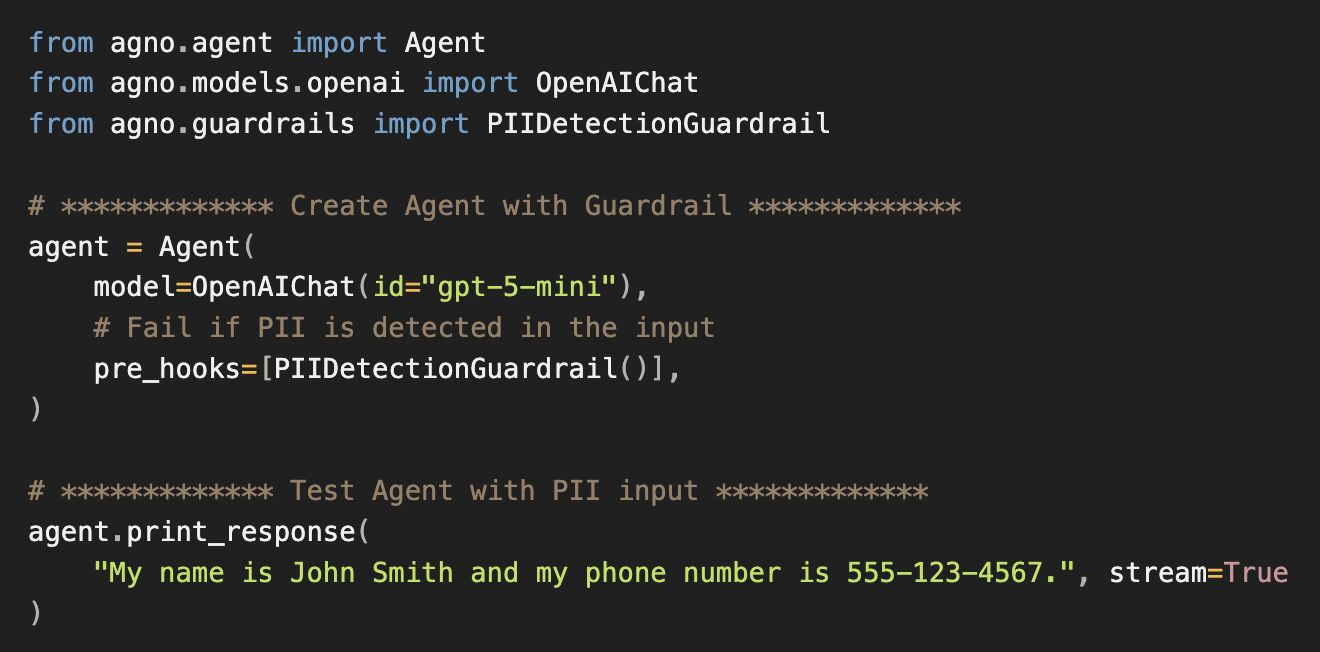

Keep your agents and their inputs secure with built-in guardrails that protect against PII leaks, prompt injections, jailbreaks, and NSFW content. Add a guardrail with a single line of code by importing it and passing it as a pre_hook when creating your agent, or extend the BaseGuardrail class to build custom safeguards tailored to your needs.

Learn more in the documentation.
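A guardrail used as a pre_hook is a check that runs on the input before the model sees it and stops the run if the check fails. The stdlib-only sketch below shows that pattern. `InputBlocked`, `PIIGuardrail`, and `run_with_pre_hooks` are hypothetical names, not Agno's `BaseGuardrail` API, and the two regexes are deliberately simplistic examples of PII detection.

```python
import re


class InputBlocked(Exception):
    """Raised when a guardrail rejects the input."""


class PIIGuardrail:
    # Deliberately simple patterns; real PII detection needs far more coverage
    PATTERNS = {
        "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
        "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    }

    def check(self, text):
        for label, pattern in self.PATTERNS.items():
            if pattern.search(text):
                raise InputBlocked(f"input contains a possible {label}")


def run_with_pre_hooks(user_input, pre_hooks, model_call):
    # Every pre-hook sees the input first; any exception stops the run
    for hook in pre_hooks:
        hook.check(user_input)
    return model_call(user_input)


def echo_model(text):
    return f"echo: {text}"


print(run_with_pre_hooks("What is RAG?", [PIIGuardrail()], echo_model))  # echo: What is RAG?
try:
    run_with_pre_hooks("Email me at jane@example.com", [PIIGuardrail()], echo_model)
except InputBlocked as exc:
    print(f"blocked: {exc}")  # blocked: input contains a possible email
```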